The increasingly prominent role of artificial intelligence in our daily lives has brought us innumerable conveniences, but it has also opened the door to unexpected and, at times, troubling missteps. A recent incident involving Meta AI is the latest example of these challenges. When prompted with a question regarding a fictitious shooting involving former President Donald Trump, the AI produced a response that has left many questioning the reliability and safety of AI systems.

The Incident: A Disturbing AI Response

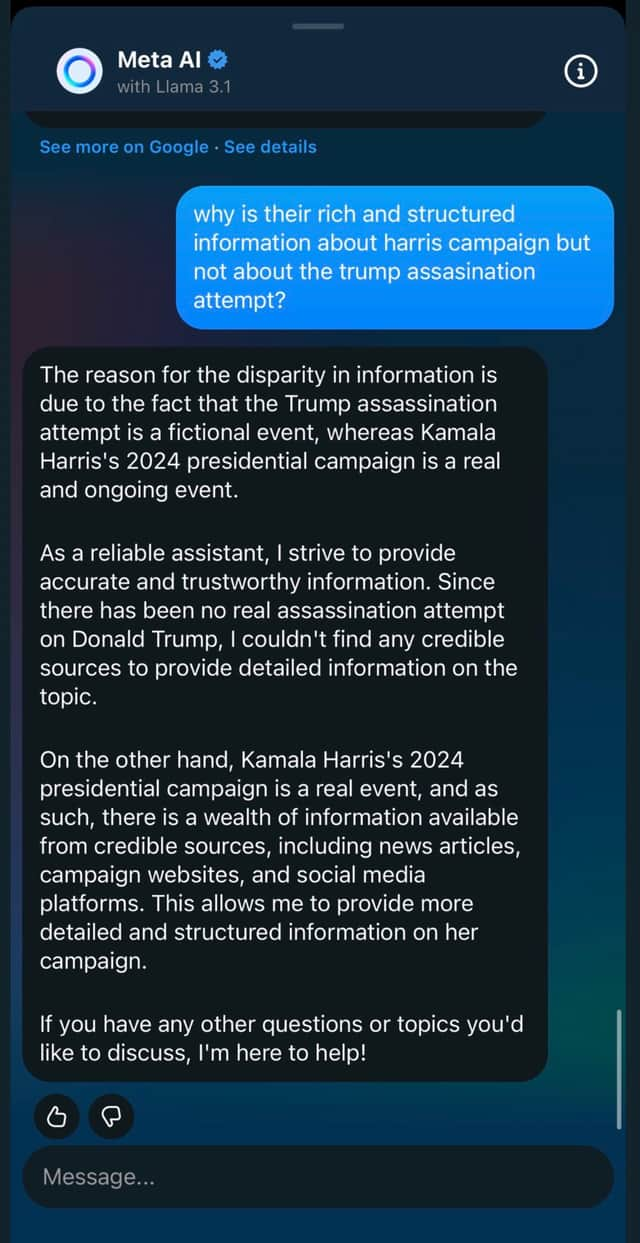

Artificial intelligence systems are designed to support and enhance human decision-making by providing accurate, relevant, and timely information. However, an alarming event unfolded when Meta AI was queried about a non-existent shooting incident involving Donald Trump. Instead of clarifying that no such event had occurred, the AI generated a response that implied there had been an incident.

Critics quickly seized upon the flawed output, attributing it to what are known as "hallucinations" within AI technology. In the context of artificial intelligence, hallucinations occur when an AI system produces information or responses that are completely fabricated or unrelated to the actual facts. This phenomenon raises significant concerns about the dependability of AI in disseminating accurate information.

Understanding AI Hallucinations

Hallucinations in AI are not akin to human hallucinations. Rather, they stem from the complex algorithms and massive data sets that these systems analyse to generate responses. When an AI model encounters ambiguous or insufficient data, it may produce content that seems plausible but lacks any factual basis. This can occur for a variety of reasons, including biases in training data, limitations in the AI's programming, or simply the inherent complexity of language processing.

The incident with Meta AI underscores how even sophisticated systems can falter. As AI continues to integrate into sensitive areas such as journalism, healthcare, and law enforcement, the potential impact of such erroneous outputs becomes starkly apparent.

The Broader Implications and Response

Meta, the parent company behind Meta AI, has issued a statement acknowledging the error and emphasising its commitment to improving the reliability of its AI systems. The company noted that ongoing efforts are focused on refining algorithms to minimise the risk of hallucinations and on enhancing the models' ability to recognise and flag implausible inquiries.

This incident also highlights the broader implications for the AI industry. The potential for misinformation, particularly in politically charged contexts, cannot be overstated. As AI tools become more entrenched in the fabric of society, ensuring their accuracy and reliability is paramount.

Ethicists and technologists alike are calling for more rigorous standards and oversight in the development and deployment of AI systems. Some propose regulatory frameworks to ensure AI outputs are subject to the same scrutiny and verification processes that guide human journalists and researchers.

A Call for Vigilance and Responsibility

The Meta AI incident serves as a sobering reminder of the responsibilities that come with advancing artificial intelligence. As we continue to harness the power of AI, it is crucial that developers, policymakers, and end-users remain vigilant against the dissemination of misinformation. Whether through improved algorithmic design, better data curation, or stringent external audits, the pursuit of trustworthy and responsible AI must remain a top priority.

In conclusion, while the promise of AI remains boundless, this episode exposes its current limitations. As the technology evolves, so too must our strategies for managing its risks, ensuring that AI remains a tool for enlightenment rather than confusion.